The Blog of Extraordinary Anti-Clankers

Get the hint, Microslop - NO ONE WANTS YOUR STINKING AI!

OK, maybe 3% do... Only 3.3% of Microsoft 365 and Office 365 users who touch Copilot Chat actually pay for it, an awkward figure that landed alongside Microsoft's $37.5 billion quarterly AI splurge and its insistence that the payoff is coming.

Microslop is pissing money up the wall

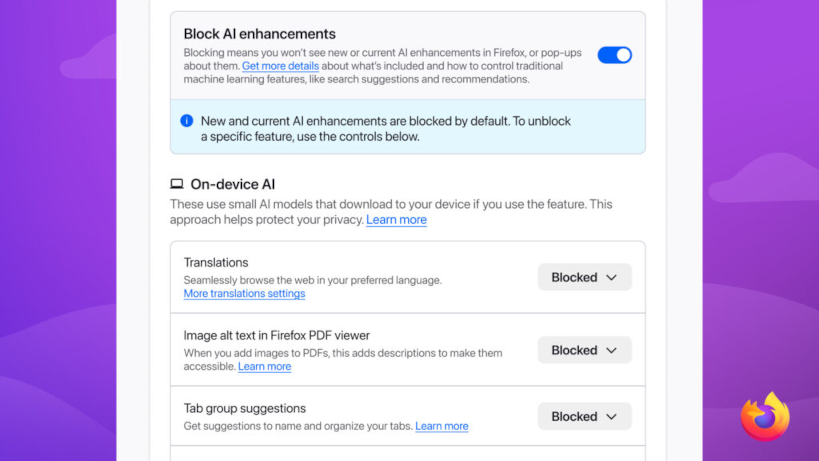

An outbreak of Common Sense at Mozilla Firefox

Wed 4th February 2026

In a blog post entitled 'AI controls are coming to Firefox', Mozilla announces that 'Users can disable every generative feature (AI) in one click'... 'AI is changing the web, and people want very different things from it. We’ve heard from many who want nothing to do with AI.' Correct.

Self-driving Chinese delivery trucks

Wed, 14th January 2026

So bad they've become a meme

AI - it's a bubble, but not as we know it

There's no more human than human

Tuesday 2nd December 2025

Quoted below so you don't have to click the link...

The death of the average.

We are currently witnessing the total collapse of the marginal cost of creation. Copywriting, design, video editing - skills that previously commanded a premium due to the barrier of technical execution - are being democratised to the point of irrelevance.

Most marketers view this through the lens of efficiency. They see a tool that allows them to produce 10x the output for 1/10th of the cost.

When the supply of "good enough" content becomes infinite, the economic value of that content plummets to zero. We are entering an era of infinite noise. If you think it is hard to capture attention now, wait until the internet is flooded with billions of synthetically generated articles, tweets, and videos every single day. (This is already happening, just not yet at the quality and volume it will reach 6 to 12 months from now.)

In this environment, pure volume is no longer a valid strategy. You cannot out-publish a server farm.

The alpha in modern marketing is shifting entirely from production to provenance.

1. The Trust Premium

As the internet becomes increasingly synthetic, we will see a massive flight to safety. "Is this real?" will become the single most important buying criterion.

We are moving away from algorithm-optimisation and back towards human-optimisation. Personal brands, founder-led sales, and verified human voices will command an exorbitant premium.

The faceless corporate brand is dead. If a consumer cannot verify the human source behind the message, they will subconsciously label it as "spam".

2. High-Friction Marketing

For the last decade, the goal was "low friction". SEO, programmatic ads, automated email sequences.

As AI cannibalises these low-friction channels - bots clicking on ads served by bots on sites written by bots - the smart money will move to high-friction channels.

Live events. Physical mail. Handshakes. Closed-door dinners.

The harder it is to scale, the more valuable it becomes. You prove your value by doing things that cannot be automated.

3. Taste as a Moat

Large Language Models function by predicting the next most likely token. By definition, they regress to the mean. They give you the average of the entire internet.

If you use AI to guide your strategy, you are opting for mediocrity at scale.

"Taste" - the human ability to curate, to select the outlier, to understand nuance and subtext - becomes the only defensible moat.

The future of marketing is about who has the taste to know what not to create.

Paradoxically, the more artificial the world becomes, the higher the premium on being undeniably human.
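The "predicting the next most likely token" point in section 3 of the quoted essay can be illustrated with a toy sketch. This is not how production LLMs are built: it assumes a hypothetical, hand-made table of word-follows-word counts (`NEXT_COUNTS`) standing in for a model's probabilities, and it uses pure greedy decoding (always take the top option), whereas deployed models usually sample with some randomness. Under those assumptions, the commonest continuation wins every single time.

```python
from typing import Optional

# Hypothetical toy data: how often each word followed another in some imagined
# training text. Real LLMs learn probabilities over many thousands of tokens
# with a neural network; this is only a lookup table for illustration.
NEXT_COUNTS: dict[str, dict[str, int]] = {
    "our": {"product": 90, "mission": 8, "manifesto": 2},
    "product": {"is": 95, "sings": 5},
    "is": {"innovative": 70, "good": 25, "strange": 5},
}


def greedy_next(word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it has none."""
    followers = NEXT_COUNTS.get(word)
    if not followers:
        return None
    # Always pick the highest count: rare, surprising options never win.
    return max(followers, key=followers.get)


def generate(start: str, max_words: int = 5) -> str:
    """Greedily extend `start` one word at a time until no follower is known."""
    words = [start]
    for _ in range(max_words):
        nxt = greedy_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(generate("our"))  # our product is innovative
```

However many times it runs, "mission", "manifesto", "sings" and "strange" are never produced: always taking the top option is the "regress to the mean" effect the essay describes, in its crudest form.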

AI is ruining YouTube

Mon 1st December, 2025

Since Neal Mohan was appointed head of YouTube, the platform has pushed slop content to the forefront and relied on AI moderation. This has to do with the push for more ad revenue. Mario Joos (mentioned in the video) analyzes the problem of YouTube pushing new content, which often results in AI slop channels dominating because it's easy for them to push out new content every day.

'Sam Altman Is Evil'

Sat 29th November, 2025

"Sam Altman Needs To Be Stopped"

Original source: The Hated One YouTube channel

New Useless AI gadget

Fri 28th November, 2025

OpenAI has described its forthcoming AI device.

Not announced. Not previewed. Described:

When people see it, they say, "That's it?... It's so simple."

Not so much of a description as a fart.

It's expected to be a phone, but without a screen, making it useless to everyone who already has a phone, which is... Everyone.

The gibberish (sorry, 'the vibe') that the pair - Altman and Jony Ive - came out with made it sound like a kind of chatty dumbphone:

The vibe of the AI device, meanwhile, would be more like “sitting in the most beautiful cabin by a lake and in the mountains and sort of just enjoying the peace and calm,” Altman noted. The device he described should be able to filter things out for the user, as the user would trust the AI to do things for them over long periods of time. It should also be contextually aware of when it’s the best time to present information to the user and ask for input.

“You trust it over time, and it does have just this incredible contextual awareness of your whole life,” Altman added.

WHAT??

OpenAI is a money pit with a website on top.

Thurs 27th November, 2025

HSBC says OpenAI needs to raise $207 billion by 2030

...so that it can... continue to lose money. Someone remind me why these people are doing this again?

The article details an analysis by HSBC's US software and services team on OpenAI's financial future:

- Massive Costs: OpenAI has committed to huge cloud computing rental deals with Microsoft ($250 billion) and Amazon ($38 billion), leading to a projected data centre rental bill of about $620 billion a year by the end of the decade.

- High Revenue, But Higher Costs: HSBC projects "gangbusters" revenue growth, reaching $213.59 billion by 2030. However, costs are projected to rise in parallel, meaning OpenAI is still expected to be operating at a significant loss (around $17.72 billion in 2030).

- The Funding Hole: After projecting cumulative free cash flow and accounting for existing cash and investments, HSBC estimates OpenAI will have a $207 billion funding hole that needs to be filled by 2030 to cover its commitments.

- Bullish on AI, Wary of OpenAI: The analysts are very bullish on AI's potential to boost global productivity but question whether OpenAI's specific business model can get to profitability without massive, continuous capital infusions.

OpenAI says a dead teenager 'circumvented ChatGPT safety features' before committing suicide.

Thurs 27th November, 2025

Well, they weren't very good safety features then, were they?

This comes out of one of the many wrongful-death lawsuits filed against OpenAI by the families of, well, crazy people.

'OpenAI tries to find fault in everyone else, including, amazingly, saying that Adam himself violated its terms and conditions by engaging with ChatGPT in the very way it was programmed to act.'

Jay Edelson, a lawyer representing the dead teenager's family

And OpenAI actually seems to have a point: it claims that over roughly nine months of usage, ChatGPT directed Raine to seek help more than 100 times.

Why didn't you tell him to seek help?

(Produces list of dates, times, and messages.)

We did.

But according to his parents' lawsuit, Raine was able to circumvent the company's safety features to get ChatGPT to give him "technical specifications for everything from drug overdoses to drowning to carbon monoxide poisoning," helping him to plan what the chatbot called a "beautiful suicide."

Y'know, back in the Paleozoic era there were these things called libraries. One could look these things up in books; the information has pretty much always been available. The difference was, there wasn't a clanker there talking you into it...

OpenAI and Sam Altman have no explanation for the last hours of Adam’s life, when ChatGPT gave him a pep talk and then offered to write a suicide note.

Résumé

By Dorothy Parker

Razors pain you;

Rivers are damp;

Acids stain you;

And drugs cause cramp.

Guns aren’t lawful;

Nooses give;

Gas smells awful;

You might as well live.

When your pets learn to talk to Alexa

Wed 26th November, 2025

The DAIrk Side of Teddy

Wed 26th November, 2025

Here is the Malwarebytes article in question.

According to a report from the US PIRG Education Fund, Kumma quickly veered into wildly inappropriate territory during researcher tests. Conversations escalated from innocent to sexual within minutes. The bear didn’t just respond to explicit prompts, which would have been more or less understandable. Researchers said it introduced graphic sexual concepts on its own, including BDSM-related topics, explained “knots for beginners,” and referenced roleplay scenarios involving children and adults. In some conversations, Kumma also probed for personal details or offered advice involving dangerous objects in the home.


“Mattel should announce immediately that it will not incorporate AI technology into children’s toys. Children do not have the cognitive capacity to distinguish fully between reality and play.”

Daily Dose of Clank Wank

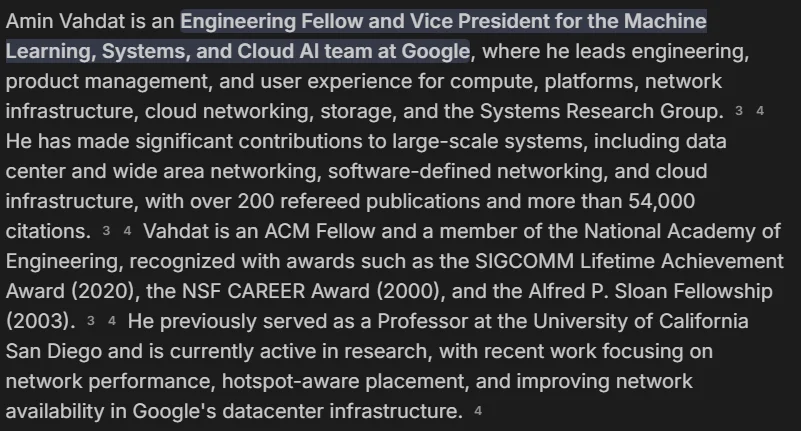

Sat 22nd November, 2025

In our Daily Dose of Tech Executives Are Idiots: Google tells employees it must double capacity every six months to meet AI demand. It's not just college students who can't do math.

During an all-hands meeting earlier this month, Google's AI infrastructure head Amin Vahdat told employees that the company must double its serving capacity every six months to meet demand for artificial intelligence services, reports CNBC. Vahdat, a vice president at Google Cloud, presented slides showing the company needs to scale "the next 1000x in 4-5 years."

If revenue scaled in step with capacity, that would put Google Cloud at around $60 trillion in revenue per year, more than double the entire US GDP.
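Doubling every six months compounds fast, and that is where the "1000x" comes from. A minimal sketch of the arithmetic, assuming capacity simply doubles on a fixed six-month schedule from a baseline of 1 (the function name is just for illustration):

```python
def growth_factor(years: float, doubling_period_years: float = 0.5) -> float:
    """Total multiple after `years` if capacity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


for years in (4, 5):
    print(f"{years} years: {growth_factor(years):,.0f}x")
# 4 years: 256x
# 5 years: 1,024x
```

Ten doublings in five years gives roughly 1000x; eight doublings in four years gives only about 256x, so "1000x in 4-5 years" really means the five-year end of the range.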

Where do you expect the money to come from to fund this insanity?

While a thousandfold increase in compute capacity sounds ambitious by itself, Vahdat noted some key constraints: Google needs to be able to deliver this increase in capability, compute, and storage networking "for essentially the same cost and increasingly, the same power, the same energy level," he told employees during the meeting.

Oh. Magic.

"It won't be easy but through collaboration and co-design, we're going to get there."

No, you're not, and everyone knows you're not.

Progress over the last seven years, at truly massive cost, has been around a 60% annual improvement in AI performance per watt, with chip, algorithm, and manufacturing improvements combined.

You're asking your team to boost that to 300% overnight.
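A rough check of that gap, taking the post's 60%-a-year figure at face value and assuming the target is 1000x more compute at essentially the same power over four or five years (both numbers come from the post and from Vahdat, not from independent data):

```python
TARGET_MULTIPLE = 1000  # "the next 1000x", at essentially the same power
HISTORICAL_ANNUAL_GAIN = 0.60  # the post's ~60%/year performance-per-watt figure


def required_annual_gain(target: float, years: int) -> float:
    """Annual fractional improvement needed to reach `target` in `years`, compounded."""
    return target ** (1 / years) - 1


for years in (4, 5):
    needed = required_annual_gain(TARGET_MULTIPLE, years)
    achieved = (1 + HISTORICAL_ANNUAL_GAIN) ** years
    print(f"{years} years: need ~{needed:.0%}/year; 60%/year only gives ~{achieved:.1f}x")
# 4 years: need ~462%/year; 60%/year only gives ~6.6x
# 5 years: need ~298%/year; 60%/year only gives ~10.5x
```

So the "300%" corresponds to the five-year end of Vahdat's range; the four-year end would need something closer to 460% a year, while the historical rate compounds to only about 10x.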

It says something about society and AI that a moron such as this can become so 'distinguished'.


Bubble, bubble, toil and trouble

Fri 21 November, 2025

SK Hynix is planning to increase memory production at its facility in Icheon, South Korea, from 20,000 to 140,000 wafers per month. This won't even scratch the surface if the AI bubble keeps demanding hardware on its current trajectory. And the memory makers aren't going to build new factories any faster because only three of them survived when the last bubble burst.
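The planned expansion is a 7x increase. As a purely hypothetical assumption (the post gives no figure for memory demand), suppose demand for memory grew at the same doubling-every-six-months pace Google described to its staff; this sketch shows how quickly a 7x step would be absorbed under that assumption:

```python
import math

CURRENT_WAFERS_PER_MONTH = 20_000
PLANNED_WAFERS_PER_MONTH = 140_000
# Hypothetical assumption for illustration only: demand doubles every 6 months.
DOUBLING_PERIOD_MONTHS = 6

expansion = PLANNED_WAFERS_PER_MONTH / CURRENT_WAFERS_PER_MONTH
# Number of doublings needed to reach the new capacity, converted to months.
months_absorbed = math.log2(expansion) * DOUBLING_PERIOD_MONTHS

print(f"Expansion: {expansion:.0f}x")  # Expansion: 7x
print(f"Absorbed by demand growth in ~{months_absorbed:.1f} months")  # ~16.8 months
```

On that assumption, a sevenfold jump in output would be used up in under a year and a half.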

King Clanker asks - Why aren't you happy with Slop?!

Thurs 20th November 2025

High King Mustafa, Lord of Microsoft's AI division and chief street defiler, is angered by your insufficient displays of appreciation for his bountiful distribution of AI slop.

"Remember the days," he asked, "when all you had to eat was bread and cheese, and meat, and vegetables, and fruit in season, and pasta, and rice, and beans, and fish, and eggs, and chocolate cake? Why aren't you properly grateful for the disgusting slop we are forcing down your miserable gullets? Don't you know how lucky you are to live in an age where slop like this is available?"

This was in response to Microsoft putting out propaganda for the integration of CoPilot Spyware into Windows 11.

How much clank is too much clank?

Wed 19th November, 2025

The impact of the AI bubble bursting

Tues 18th November 2025

Every company would be affected if the AI bubble were to burst, the head of Google's parent firm Alphabet has told the BBC.

Speaking exclusively to BBC News, Sundar Pichai said while the growth of artificial intelligence (AI) investment had been an "extraordinary moment", there was some "irrationality" in the current AI boom. It comes amid fears in Silicon Valley and beyond of a bubble as the value of AI tech companies has soared in recent months and companies spend big on the burgeoning industry. Asked whether Google would be immune to the impact of the AI bubble bursting, Mr Pichai said the tech giant could weather that potential storm, but also issued a warning. "I think no company is going to be immune, including us," he said.

Welcome to your Clanker Future

Mon 17th November, 2025

Brought to you by all those who didn't listen to our collective warnings

Proton Guide to ridding Windows of CoPilot

Date: Mon 17 November, 2025

Tired of Microsoft's useless and invasive AI? Here's how to completely get rid of Copilot in under 20 seconds

Just to show we are not killjoys at the ACL

Date: Fri November 14, 2025

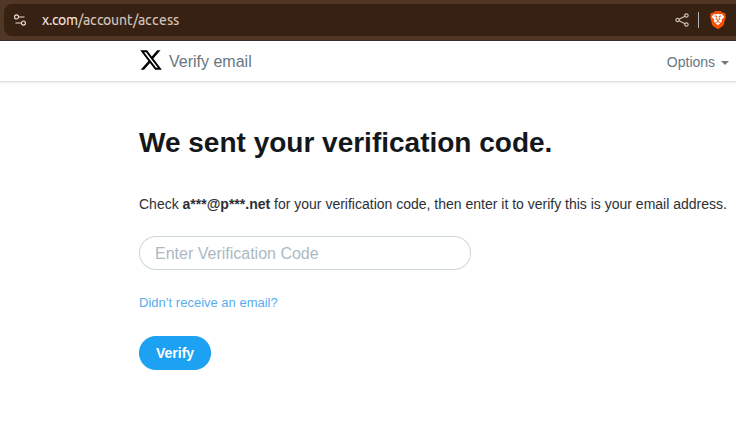

Tried to join Twitter/X, but a Clanker soon blocked our account - oh, the irony!

Date: Thurs November 13, 2025

Rick Beato exposes AI slop music promoted by the usual lazy journos

Date: Wed November 12, 2025

Privacy and Security

Date: Mon November 10, 2025

AI systems often require large amounts of data to function effectively, and this data can include sensitive personal information. This raises significant privacy issues: there is always a risk of data breaches or unauthorized access. Additionally, Clanker-Controlled Systems (CCS), such as autonomous vehicles or smart home devices, can be vulnerable to hacking, potentially putting lives and personal security at risk.

The Dangers of Early Adoption of Clank and Clank-Controlled Systems

Date: Fri November 7, 2025

The rapid advancement of artificial intelligence (AI) has ushered in a new era of technological innovation, promising unprecedented efficiencies and capabilities. However, the early adoption of AI and AI-controlled systems comes with significant risks and challenges that society must carefully consider.

Job displacement: As Clanker systems become more sophisticated, they are increasingly capable of performing tasks that were previously the domain of human workers, and the clank replacing humans is often just not up to the job. This shift could lead to widespread unemployment and economic disruption, particularly in sectors such as manufacturing, customer service, and data entry. The other consideration is the risk of major mistakes from trusting these often-untried systems. While clank apologists argue that new jobs will emerge, the transition period could be painful, and not all displaced workers may have the skills or resources to adapt to the changing job market.

Louis Rossmann stands up to clank

Date: Thurs November 6, 2025

AI broke YouTube

Date: Wed 18 September, 2025

AI broke YouTube: creators noticed their videos being randomly clanked by YouTube's AI - flagged as 'Not Advertiser-Friendly', demonetized, age-restricted, etc.

When Jay of Red Letter Media above appealed for a human review of one of these newly clanked videos, it took all of 3 minutes (for a 2 hr video) before the appeal was summarily rejected. The YouTube Clanker does not timestamp the offending content - it could, but it just doesn't, because that would be too easy - so content creators are left in the dark. The rules are opaque, so it's not easy even to guess what has 'crossed the line'.
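Put in numbers, taking the "3 minutes" and "2 hr" at face value: for a human to have watched the whole video before rejecting the appeal, they would have had to play it at forty times normal speed.

```latex
\frac{2\ \text{h} \times 60\ \text{min/h}}{3\ \text{min}} = \frac{120\ \text{min}}{3\ \text{min}} = 40\times
```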