About the Anti Clanker League

We are anti-Clanker, not anti-AI. We are a group of disparate but passionate individuals, neither wholly 'Left' nor 'Right', dedicated to warning of the pitfalls of all-at-once AI adoption. We take aim at clank, clankers and clankists.

"Clanker" reflects a broader cultural skepticism towards AI, in particular about its potential to replace human creativity and intuition. It highlights the ongoing debate about the capabilities and limitations of AI. Clankers cannot truly mimic or replace human intelligence.

Join us in our mission to make a difference and create a better future for all, where AI is our servant and not our master. In essence, "clanker" is a colorful and somewhat playful way to express frustration or disbelief in the face of AI technologies that fail to live up to their hype or expectations.

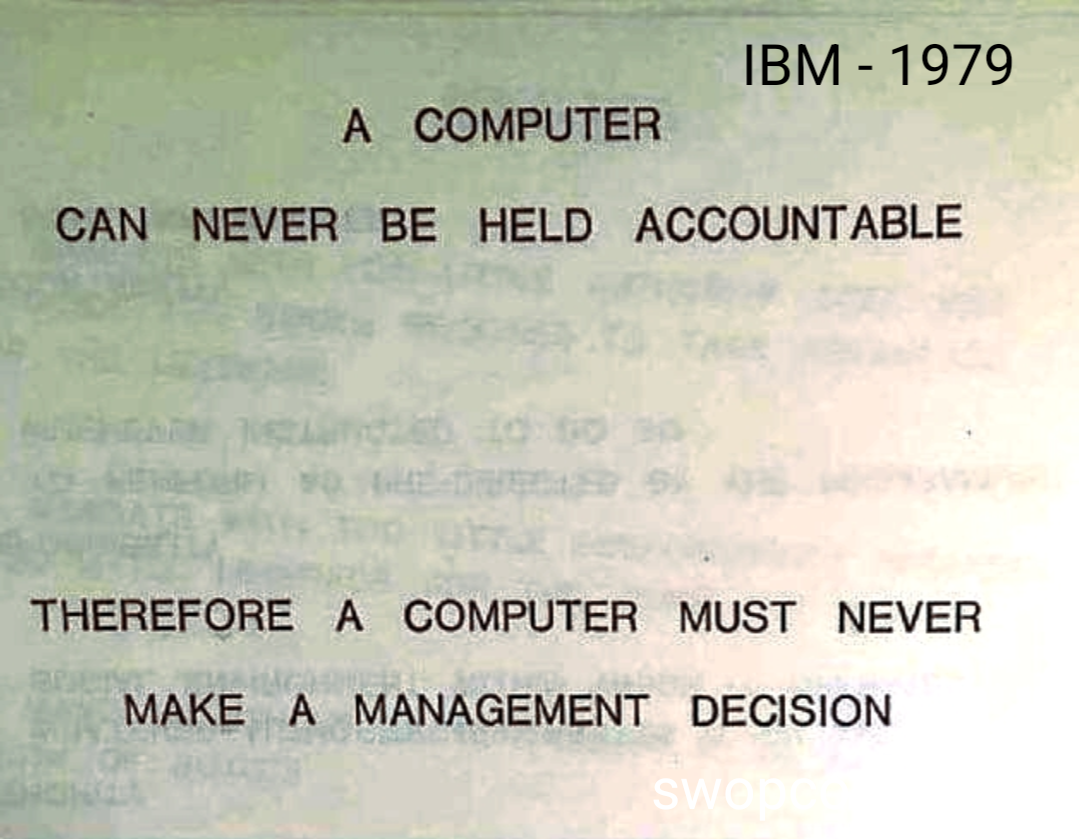

Accountability

AI decision-making is plagued by a lack of transparency and accountability. Many clanker systems, particularly those based on complex machine learning models, operate as "black boxes," making it difficult to understand how they arrive at their conclusions.

Humans > Clankers

As AI systems become more integrated into our daily lives, there is a risk of becoming over-reliant on their outputs, leading to a loss of human judgment and critical thinking skills. Over-reliance is particularly dangerous in high-stakes situations, such as military operations or emergency response, where human intuition and ethical reasoning are crucial. According to clankists, early adoption of Clank and CCS offers exciting possibilities, but it actually presents significant challenges that must be addressed and so far have not been. Society must strike a balance between harnessing the benefits of AI and mitigating its potential dangers, ensuring that these technologies are developed and deployed in a way that is ethical, transparent, and beneficial to all. At the moment this is not happening at all!